Deep learning: the new frontier in visual effects production

Artificial intelligence (AI) and deep learning technologies are disrupting our world in unprecedented ways. From transport and infrastructure to marketing and fintech, self-learning machines are increasingly being deployed to challenge the status quo.

Deep learning, a branch of machine learning, enables systems to learn and predict outcomes without explicit programming. Instead of writing algorithms and rules that make decisions directly, machine learning teaches computer systems to make decisions from large datasets. It creates models that represent and generalise the patterns in their training data, then uses those models to interpret and analyse new information.
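To make the contrast between explicit rules and learning from data concrete, here is a minimal Python sketch. It is an illustration only, not taken from any Foundry system. Instead of hard-coding the rule y = 2x + 1, we fit a one-variable linear model to example pairs with gradient descent, then apply it to an input it has never seen. A real deep learning model has many layers and millions of parameters, but the core idea of adjusting parameters to reduce error on training data is the same.

```python
import random

def fit_linear(xs, ys, lr=0.01, epochs=2000):
    """Learn weight w and bias b so that w*x + b approximates y,
    using plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Training data generated from a rule the learner is never told: y = 2x + 1
random.seed(0)
xs = [random.uniform(-5, 5) for _ in range(100)]
ys = [2 * x + 1 for x in xs]

w, b = fit_linear(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"prediction for unseen x=10: {w * 10 + b:.2f}")
```

The learner only ever sees input/output examples; the rule itself emerges from the data.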

Examples of this technology include chatbots, spam filters, self-driving cars and a range of other systems. It is gradually spreading into every area of our lives, from the home to the commute to the workplace, and changing how we perform everyday tasks.

Deep learning in visual effects

At Foundry, we employ engineers and algorithm developers whose job is to research and develop ground-breaking technologies for the creative industries. Our strategic partnerships with UK and European research institutes and universities ground our work in academic research and help us push the boundaries of what’s achievable in visual effects and beyond. They are also a fantastic way to inspire students to pursue careers in cutting-edge visual effects, working on real-world problems whose solutions could enter post-production in the near future.

We'd like to highlight two of the internships we're running this year, which explore the impact of AI on video editing and on image processing, understanding and manipulation. We believe AI and deep learning could have a massive impact on visual effects, as they already have in other industries, and these research projects are letting us dip our toes into this exciting field.

Project #1: Traditional Effects

The first project explores the potential of deep learning to solve an everyday challenge in visual effects: removing noise from images and videos in post-production. Decades of research into handcrafted algorithms have enabled artists to clean up images through signal processing analysis, modifying camera footage to dampen unwanted noise and produce a clean output image. This has been a standard VFX process since the 1980s, and artists have numerous tools at their disposal to perform the task.
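As an illustration of what a handcrafted noise-reduction algorithm looks like, here is a minimal Python sketch of a 3×3 median filter, a classic technique chosen purely as an example. It is not how Nuke's own denoising tools work. The image is assumed to be a plain list of lists of grey values, and pixels beyond the border are handled by clamping coordinates to the nearest edge.

```python
from statistics import median

def median_filter_3x3(img):
    """Replace each pixel with the median of its 3x3 neighbourhood.
    Coordinates outside the image are clamped to the nearest edge pixel."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(median(window))
        out.append(row)
    return out

# A flat grey image with one "hot pixel" of impulse noise in the middle
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255

clean = median_filter_3x3(noisy)
print(clean[2][2])  # prints 10: the outlier is replaced by its neighbours' value
```

Every decision here, from the window size to the choice of the median, was made by a person rather than learned from data.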

PhD student Da Chen, from Bath University, joined the NukeX team at Foundry to test whether deep learning can be used to remove noise in our Nuke compositing software. Deep learning has enabled us to take hundreds of thousands of examples of noisy input images, paired with the same images without the noise, and train a neural network to map each noisy image to a clean one quickly and efficiently.
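The supervised recipe described above can be illustrated with a deliberately tiny stand-in for a neural network. The Python sketch below is a toy under stated assumptions, not Foundry's model. It learns the three weights of a 1D convolution filter from synthetic pairs of noisy and clean signals (the hypothetical `make_pair` helper generates sine waves plus Gaussian noise) by gradient descent on mean squared error, then applies the learned filter to a fresh noisy signal. A production denoiser would use a deep convolutional network on 2D images, but the loop is the same: show the noisy input, compare the output with the clean target, adjust the weights.

```python
import math
import random

def at(signal, i):
    """Read signal[i], clamping the index to the valid range."""
    return signal[min(max(i, 0), len(signal) - 1)]

def apply_filter(signal, k):
    """Convolve a 1D signal with a 3-tap kernel k (taps at i-1, i, i+1)."""
    return [sum(k[j] * at(signal, i + j - 1) for j in range(3))
            for i in range(len(signal))]

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def make_pair(rng, n=64, noise=0.3):
    """Hypothetical training pair: a smooth clean signal plus Gaussian noise."""
    phase = rng.uniform(0, 2 * math.pi)
    clean = [math.sin(0.2 * i + phase) for i in range(n)]
    noisy = [c + rng.gauss(0, noise) for c in clean]
    return noisy, clean

def train(pairs, lr=0.01, epochs=100):
    k = [0.0, 1.0, 0.0]  # start from the identity filter (no denoising)
    for _ in range(epochs):
        for noisy, clean in pairs:
            out = apply_filter(noisy, k)
            n = len(noisy)
            # Gradient of mean squared error with respect to each kernel weight
            grad = [0.0, 0.0, 0.0]
            for i in range(n):
                err = out[i] - clean[i]
                for j in range(3):
                    grad[j] += 2 * err * at(noisy, i + j - 1) / n
            k = [kj - lr * gj for kj, gj in zip(k, grad)]
    return k

rng = random.Random(42)
train_pairs = [make_pair(rng) for _ in range(20)]
kernel = train(train_pairs)

test_noisy, test_clean = make_pair(rng)
denoised = apply_filter(test_noisy, kernel)
noisy_mse = mse(test_noisy, test_clean)
denoised_mse = mse(denoised, test_clean)
print("learned kernel:", [round(v, 3) for v in kernel])
print(f"MSE before: {noisy_mse:.4f}, after: {denoised_mse:.4f}")
```

Nobody tells the model to average neighbouring samples; it discovers a smoothing kernel because that is what reduces the error against the clean targets.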

Rather than painstakingly handcrafting signal processing analysis, we can teach a machine to produce clean results automatically. This has the potential to transform the way artists work with noisy footage, automating the task and freeing up more time for creativity.

Project #2: Seeing the Light

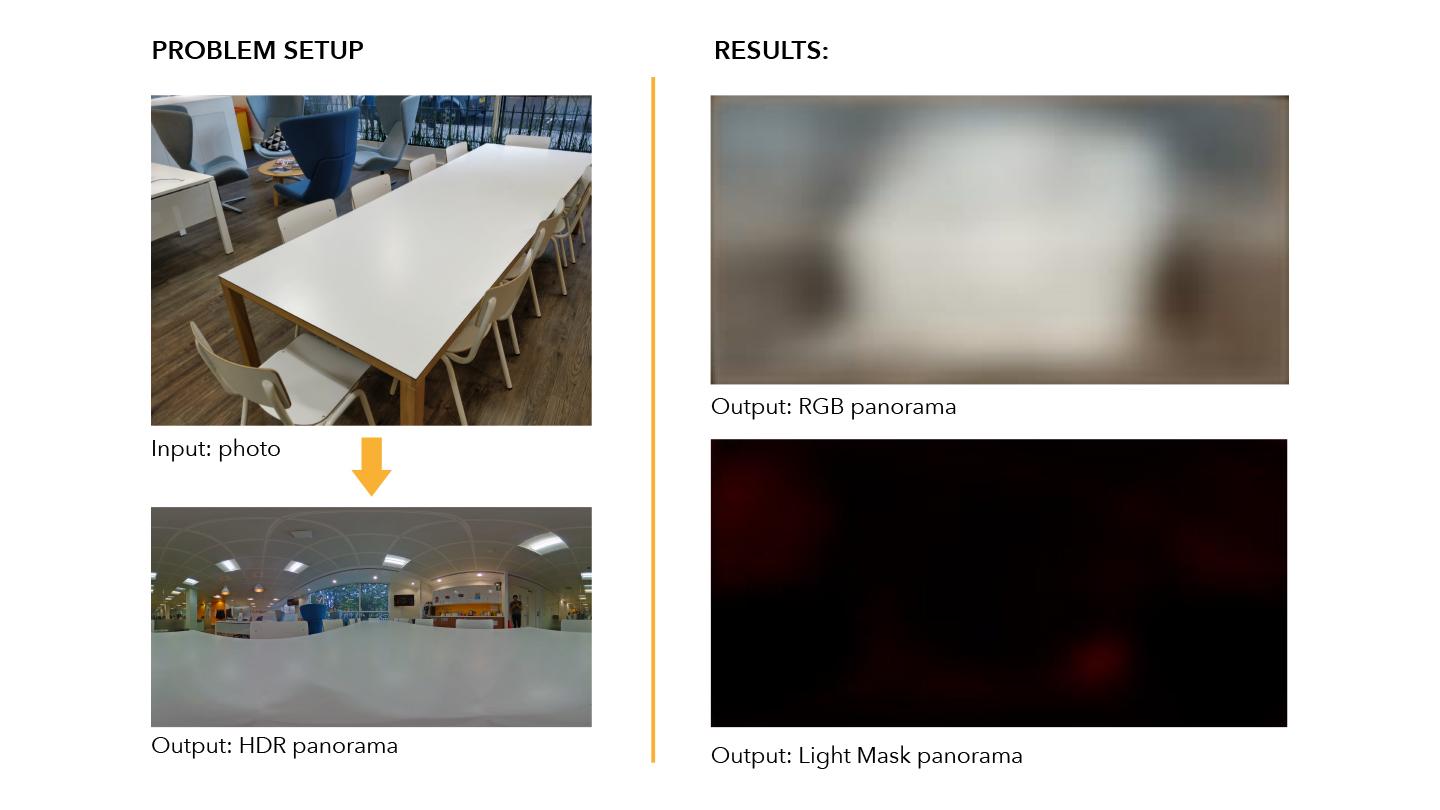

This second project has tested whether we can harness AI to perform tasks that wouldn’t otherwise be possible. Can we solve traditional VFX problems in new ways, using deep learning as a magic box that solves them for us?

We tested whether it is possible to ‘hallucinate’ physical lighting from just a single picture, so that digital models can be added to it photorealistically. In movies, real-world lighting has to be carefully captured using light probes. From our work in visual effects, we know that this lighting is critical to making characters and materials appear physically correct. We wanted to explore whether an AI system could be trained to reproduce this knowledge.

PhD student Corneliu Ilisescu, from University College London, joined the team developing new technologies for cinematic Augmented Reality (AR) experiences. Together, the team has explored how deep learning can recognise lighting: a network is trained on thousands of examples where the lighting is known, then used to set up lighting for AR content.

This project has explored how AI can make light capture simpler and more accessible. We want to find out whether it is possible to leverage the vast amounts of data generated in VFX to make all forms of production and post-production faster and more effective.

A window into the future

It isn’t yet clear how AI and deep learning will change the visual effects industry. There are many smaller areas, of which these internships are two examples, that could be improved or made more efficient through AI. On the CG side, tasks such as character modelling, asset modelling, asset material representation and lighting representation could be affected, while on the video side, 3D modelling and understanding scenes through the lens could also benefit from machine learning.

The world of entertainment has advanced in leaps and bounds over the past 20 years. Throughout its history, Foundry has been at the forefront of massive improvements in how immersive visual content can be. From 2D cinema to 3D, AR and VR content, emerging technologies have been a catalyst for these developments.

Although the breadth and quality of content have changed, the number of people involved in each project remains roughly the same. AI and deep learning will have the most impact by taking the “grunt” out of post-production work, automating the more mundane tasks and opening new doors of perception and creativity.