Tackling the anti-heroes of compositing with NukeX and HieroPlayer.

Superheroes come in all shapes and sizes, and the smallest ones are often the mightiest. That’s definitely the case in Epic Tails (originally Pattie et la colère de Poséidon), an animated family adventure feature that tells the story of super-smart mouse Pattie and her ginger feline friend Sam. Together, they're on a mission to save the ancient Grecian port city of Yolkos from the wrath of sea god Poseidon. On their journey they meet gods and anthropomorphized creatures from Greek mythology.

The film was animated by TAT Productions, a studio that was founded in 2000 by David Alaux, Eric and Jean-François Tosti. Based in Toulouse, France, the studio's most notable project, The Jungle Bunch, won an International Emmy Award and has been broadcast in more than 200 countries and translated into 50 languages. Over the past five years, TAT Productions has grown to be one of the most prolific European studios in the field of cinema and has been aiming to make a film every two years. These include The Jungle Bunch (2017), Terra Willy (2019), and Pil’s Adventures (2021).

When working on Epic Tails, TAT Productions combined the power of NukeX, our advanced industry-standard compositing toolkit, with HieroPlayer's capability to review shots in context.

We sat down with Technical Director Romain Teyssonneyre to find out more about the studio's creative process and share insights into its workflows and solutions.

How it all started

“The studio moved from After Effects to NukeX in 2020 for Pil’s Adventures,” explains Romain. “We gave ourselves six months of R&D for building a fresh new workflow with the help of two technical artists: Jérômes Desplas and Kevin Kergoat. After that, we could enter into production more serenely.”

Since then, the pipeline has been designed and built by Compositing Pipeline Supervisor Colin Wibaux with the help of CG Developer Romain Truchon. Justine Thibaut is currently the studio's Compositing Supervisor. “She has an artistic view on all the projects and also helps to drive the pipeline from the production side,” says Romain.

Before moving to Nuke, the studio wasn’t able to work as flexibly with its CG renders. For example, cutouts were rendered into the images rather than handled with deep data, which limited the options for adjusting the lighting after rendering. And so they chose NukeX.

Romain explains the motivation behind moving to Foundry’s advanced compositing toolset, NukeX, saying: “We wanted to have the possibility of working in Back To Beauty from V-Ray, more specifically to work on lights AOVs added instead of working on beauty directly. We also wanted to work with deep compositing and avoid rendering shots multiple times just because of the cutout mask. Additionally, we wanted to make use of cryptomattes, Viewport 3D, and DeepToPoints.”

Exploring the 3D pipeline

First, let’s have a look at TAT Productions’ 3D pipeline before going deeper into their compositing pipeline. The studio used 3ds Max and V-Ray for lighting and rendering.

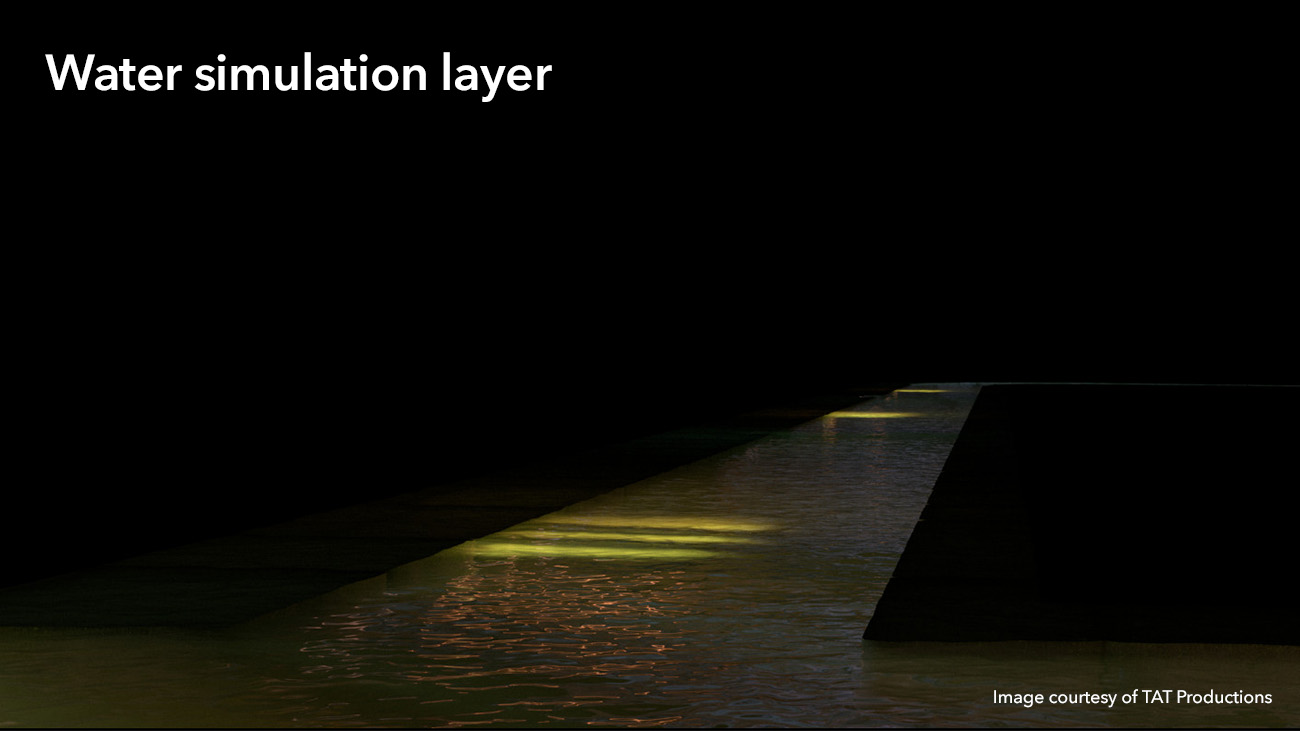

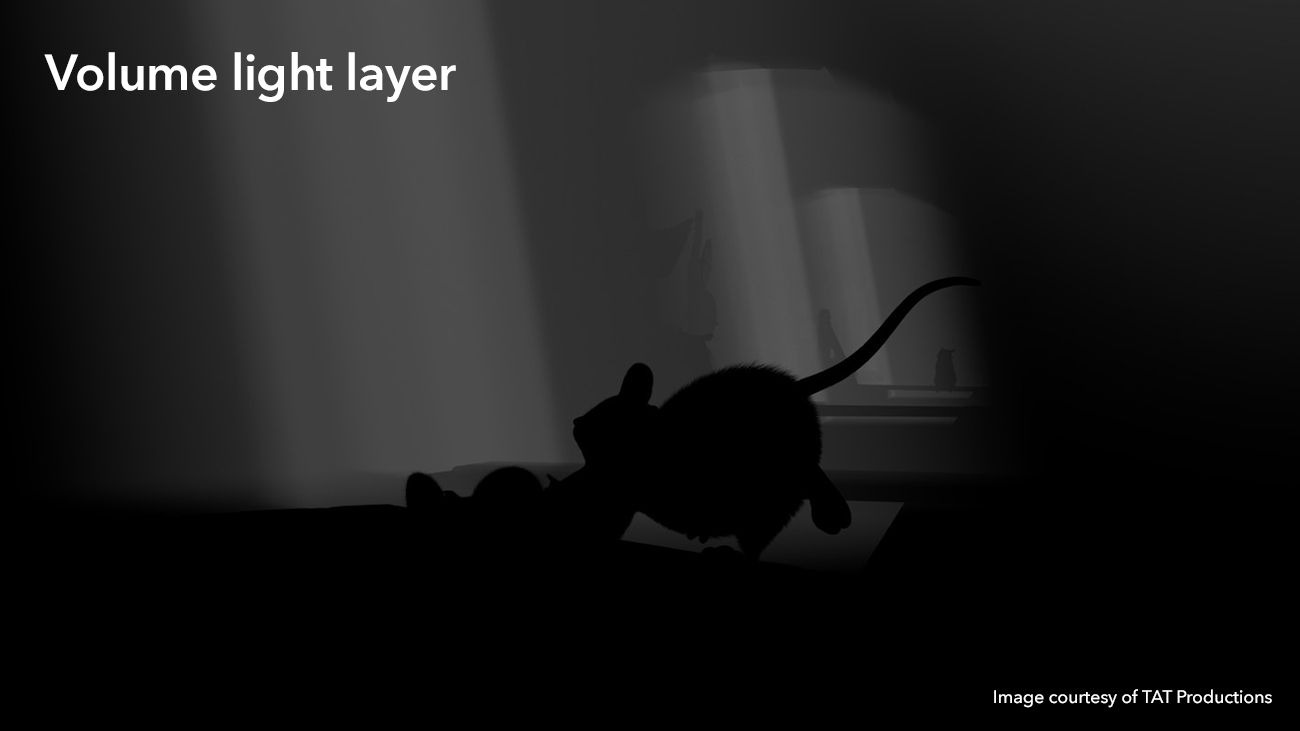

The VFX team developed an internal tool to separate every element in the image on a dedicated layer that they named La PassTech, giving them full creative control in the comp.

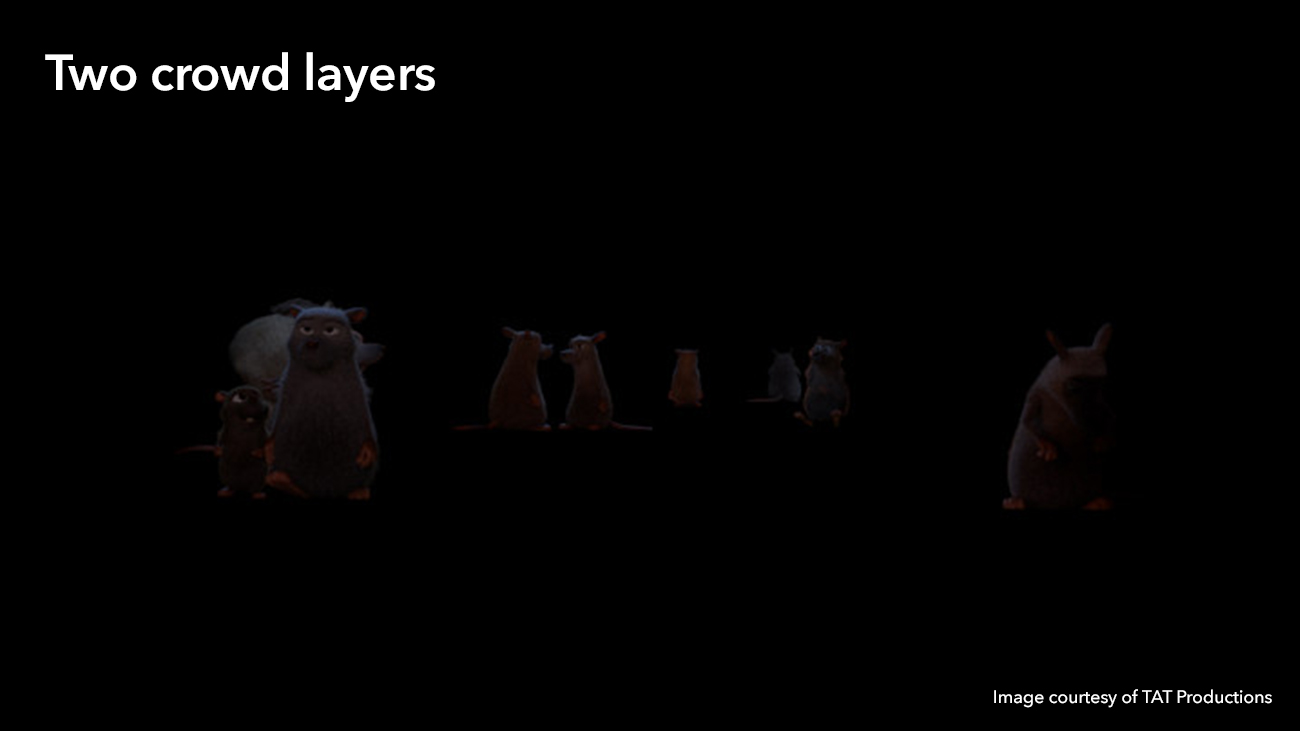

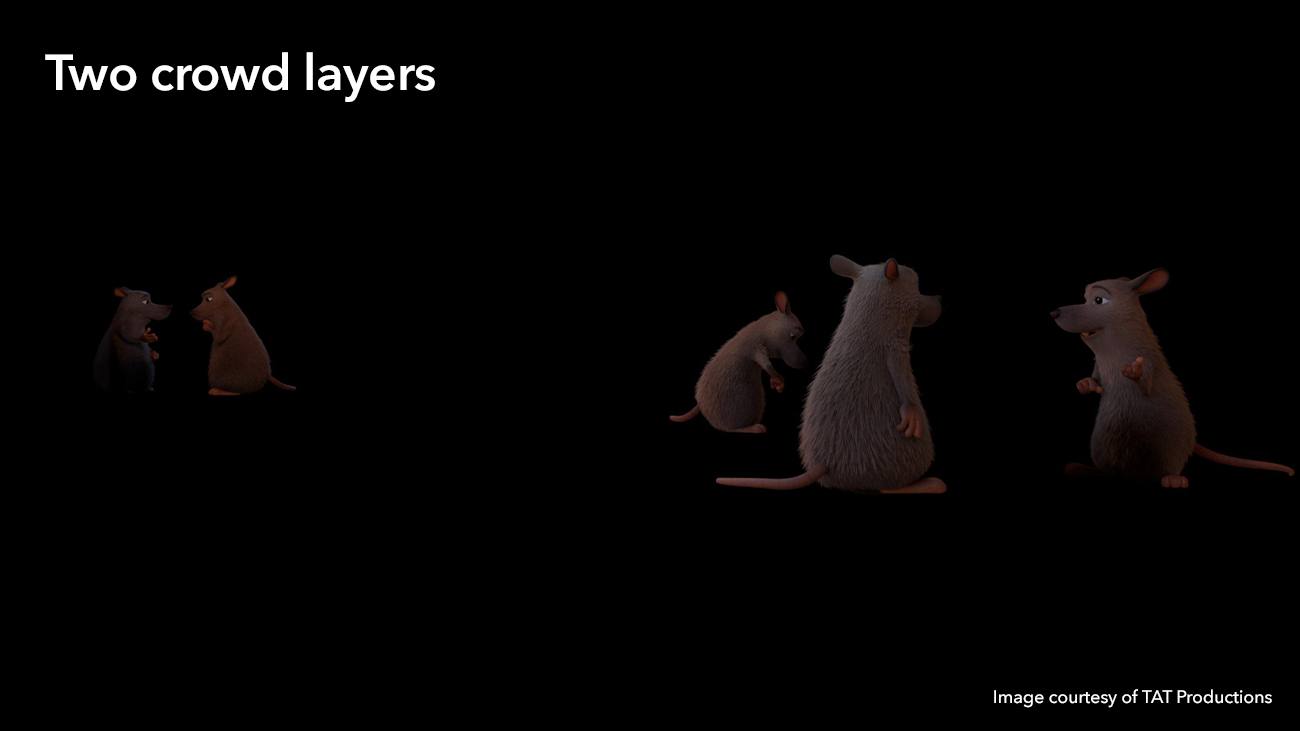

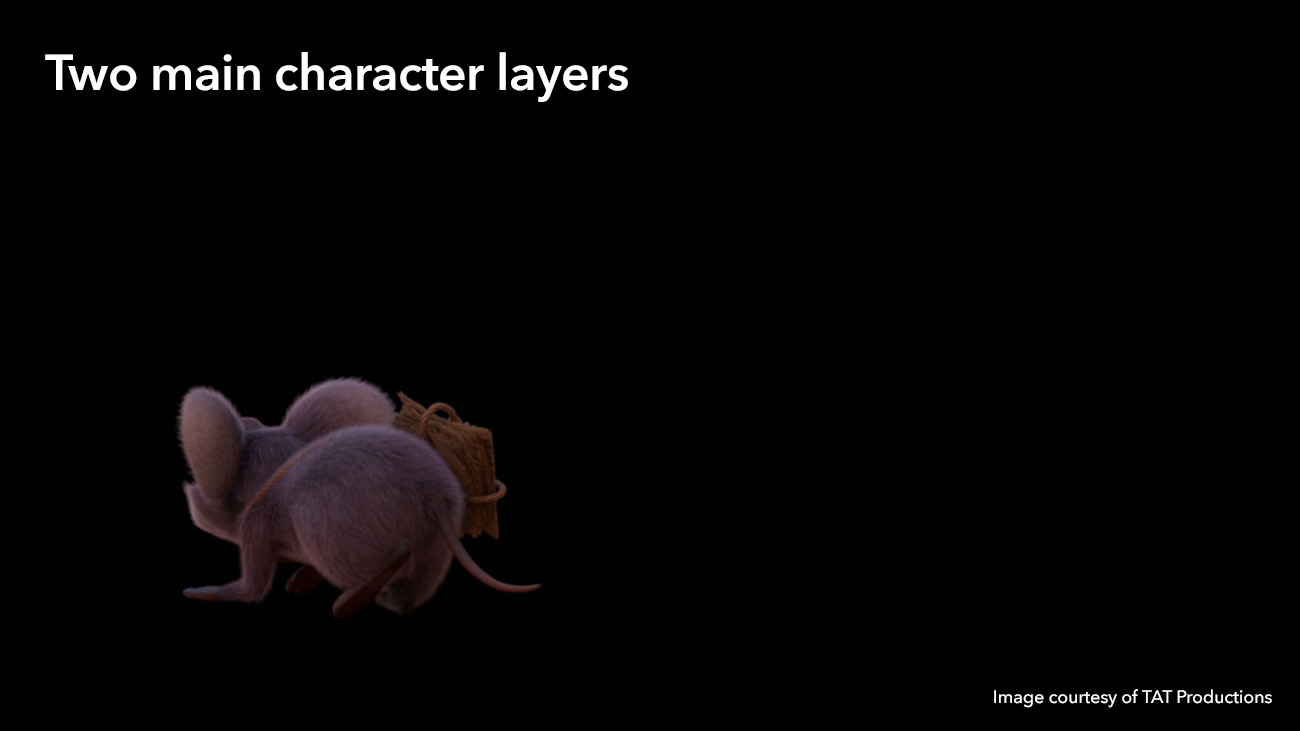

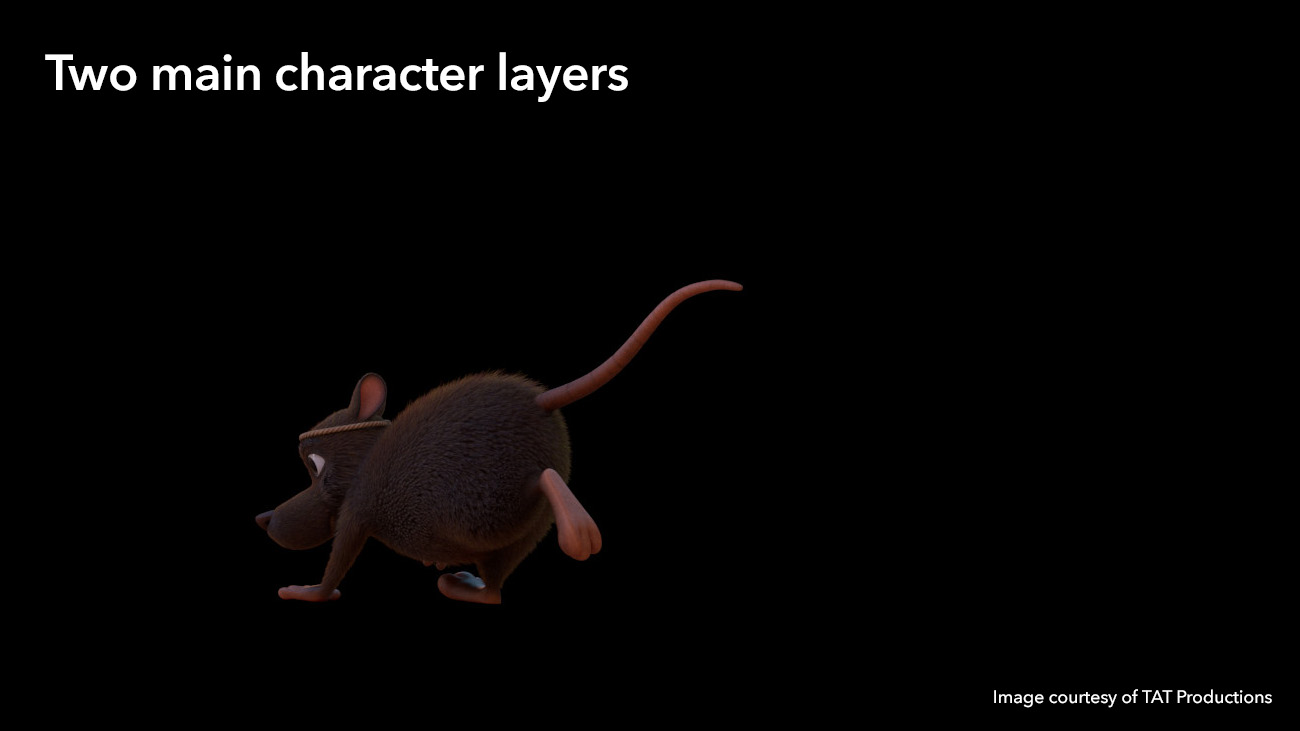

They used the tool to separate each main character, groups of secondary characters or crowd, foreground, background, fires, smokes, waters, and volume lights.

Each of these layers is rendered within three passes with many different AOVs in a multi-channel EXR.

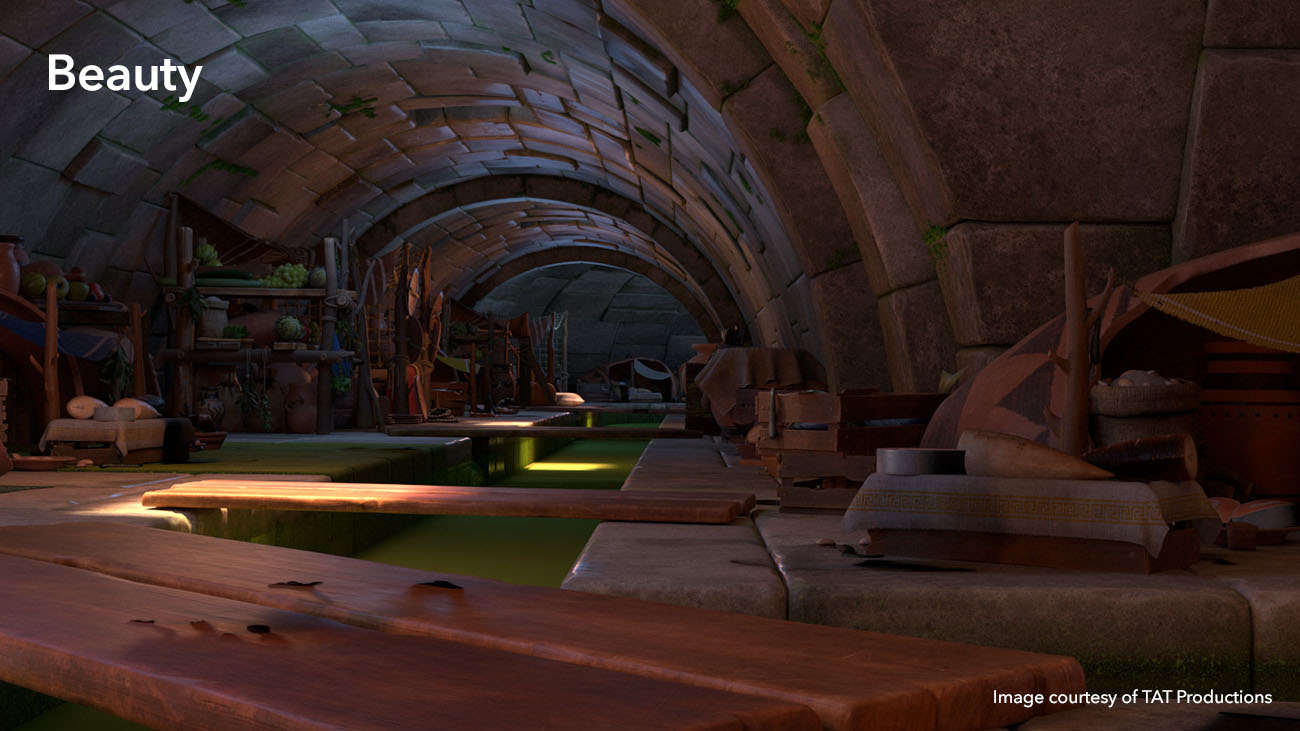

Beautify it and render!

These multi-channel EXRs can contain up to six light groups to make up the final image, as well as technical AOVs like Albedo, SSS and Velocity.
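The idea of rebuilding the beauty from light groups can be sketched in a few lines. This is a minimal illustration, not the studio's setup: it assumes the light-group AOVs are additive, so that their sum reproduces the beauty, and the group names (`key`, `fill`, `rim`) and pixel values are made up for the example.

```python
def rebuild_beauty(light_groups):
    """Sum per-light-group RGB values into one beauty pixel."""
    beauty = [0.0, 0.0, 0.0]
    for rgb in light_groups.values():
        for i in range(3):
            beauty[i] += rgb[i]
    return tuple(beauty)


def regrade(light_groups, gains):
    """Scale selected light groups, then rebuild the beauty."""
    graded = {}
    for name, rgb in light_groups.items():
        gain = gains.get(name, 1.0)  # groups without a gain stay untouched
        graded[name] = tuple(channel * gain for channel in rgb)
    return rebuild_beauty(graded)


# Hypothetical single pixel split into three light groups.
pixel = {
    "key": (0.5, 0.25, 0.125),
    "fill": (0.25, 0.25, 0.25),
    "rim": (0.0, 0.125, 0.5),
}

original = rebuild_beauty(pixel)
relit = regrade(pixel, {"rim": 2.0})  # double the rim light only
```

Because each light lives in its own AOV, doubling the rim light here changes only that contribution, which is the kind of post-render lighting adjustment that working on the beauty directly does not allow.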

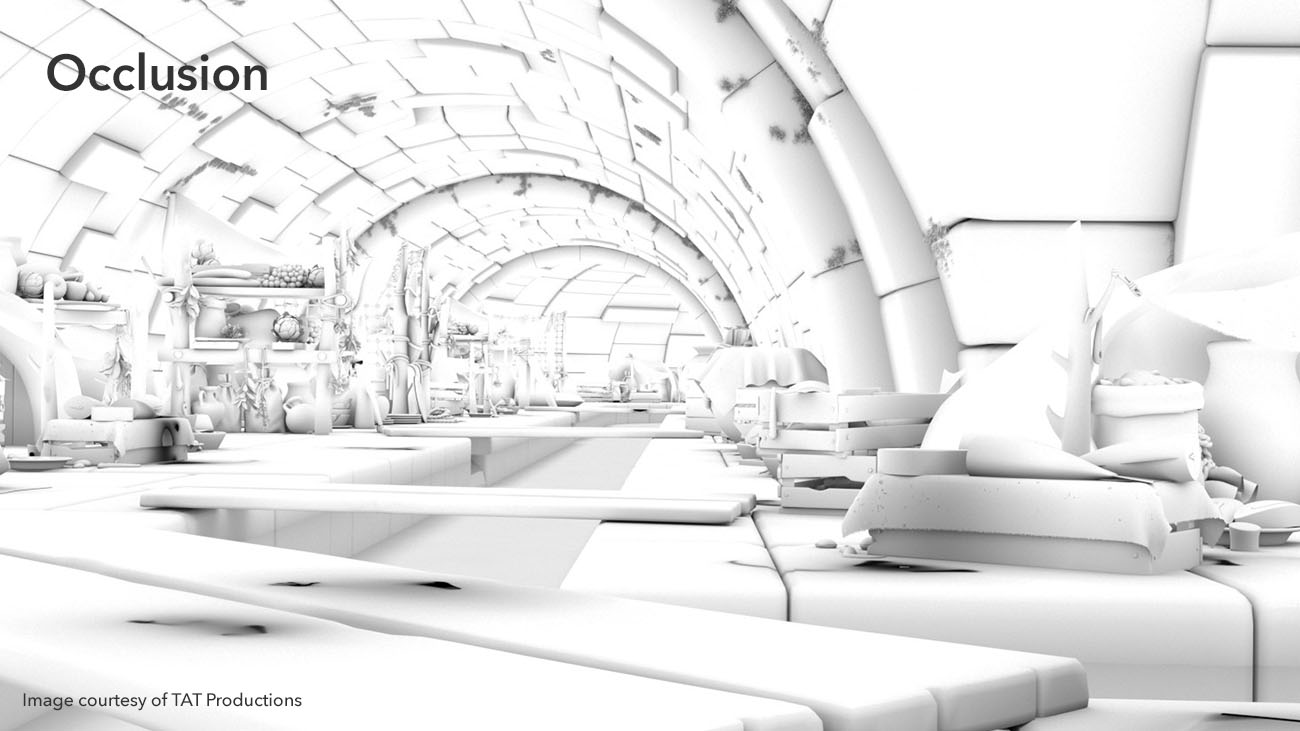

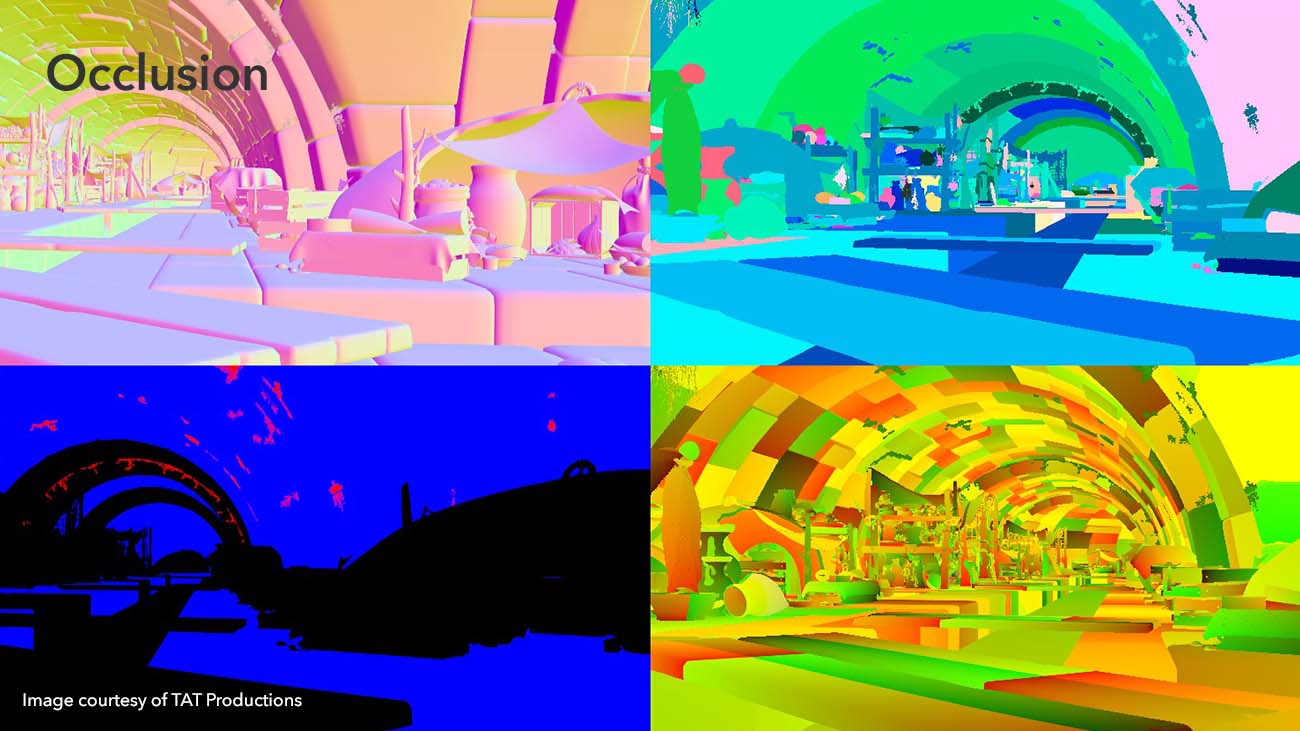

For occlusion, the studio used a custom override shader to manage different distance cases like environments, characters, fur or opacity maps. The pass above also contains many technical AOVs such as cryptomattes, normals, position reference passes (PRefs), and custom object IDs.

Finally, the team rendered a Deep image that only contains the Z information.
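To show why depth samples remove the need for rendered-in cutouts, here is a generic sketch of deep compositing for a single pixel. It is not TAT Productions' implementation: it assumes premultiplied samples stored as hypothetical `(depth, color, alpha)` tuples with a single color channel, and it simply sorts the samples of several layers by depth before applying a front-to-back "over".

```python
def flatten_deep(samples):
    """Composite premultiplied deep samples front to back.

    Each sample is a (depth, color, alpha) tuple; smaller depth is
    closer to the camera.
    """
    color = 0.0
    alpha = 0.0
    for _depth, sample_color, sample_alpha in sorted(samples, key=lambda s: s[0]):
        remaining = 1.0 - alpha  # how much of the pixel is still uncovered
        color += remaining * sample_color
        alpha += remaining * sample_alpha
    return color, alpha


# Hypothetical layers rendered separately, with no cutout mask.
character = [(5.0, 0.5, 0.5)]
smoke = [(3.0, 0.25, 0.5), (8.0, 0.25, 0.5)]

# Merging is just concatenation; depth ordering resolves occlusion.
merged = flatten_deep(character + smoke)
```

The smoke sample in front of the character attenuates it and the one behind is attenuated by it, so the layers interleave correctly even though neither was rendered with knowledge of the other.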

“As we can see, our rendering process is pretty heavy,” says Romain. “We have an average render time of between six and eight hours for a final image; this can increase to more than 15 hours for big shots. We have an internal render farm of 280 nodes and 200 workstations and everything is managed with Deadline.”

But what happens when a shot is ready to render? TAT Productions implemented an internal precomping process that launches a Nuke job, which stacks the passes purely for rendering and AOV verification. “Here, instead of importing from 3D scenes, we also extract metadata from EXR to export a Nuke Camera that will match perfectly to the renders,” Romain explains.
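As a rough sketch of what deriving a camera from render metadata can involve, the example below converts a field of view and film-back width into a focal length and unpacks a flattened 4x4 transform. Everything about the metadata layout is an assumption for illustration: the keys `camera_fov_deg`, `camera_aperture_mm` and `camera_transform` are hypothetical, as is the row-major matrix with translation in the last column. The actual keys and conventions depend on the renderer and on how the studio's tool reads them.

```python
import math


def camera_from_metadata(meta):
    """Build simple camera parameters from a metadata dictionary.

    The key names and the matrix layout are hypothetical; adapt them
    to what the renderer actually writes.
    """
    fov = math.radians(meta["camera_fov_deg"])
    aperture = meta["camera_aperture_mm"]
    # Pinhole relation: tan(fov / 2) = (aperture / 2) / focal
    focal = aperture / (2.0 * math.tan(fov / 2.0))

    flat = meta["camera_transform"]
    if len(flat) != 16:
        raise ValueError("expected 16 values for a 4x4 transform")
    matrix = [list(flat[i:i + 4]) for i in range(0, 16, 4)]
    # Assumes row-major storage with translation in the last column.
    translation = tuple(row[3] for row in matrix[:3])

    return {
        "focal_mm": focal,
        "haperture_mm": aperture,
        "matrix": matrix,
        "translation": translation,
    }


# Hypothetical metadata for one frame.
meta = {
    "camera_fov_deg": 90.0,
    "camera_aperture_mm": 36.0,
    "camera_transform": [
        1.0, 0.0, 0.0, 1.0,
        0.0, 1.0, 0.0, 2.0,
        0.0, 0.0, 1.0, 3.0,
        0.0, 0.0, 0.0, 1.0,
    ],
}
cam = camera_from_metadata(meta)
```

Values like these could then be keyed per frame onto a camera node, so the comp camera follows exactly what was rendered rather than a separately exported scene camera.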

Given the complexity of our compositing tool, every studio chooses to use it differently, depending on their own challenges and workflows. TAT Productions gave us an insight into the pipeline they built around NukeX and the tools they developed in order to improve their workflow. One of the tools they developed was The Lego, which was used for shot creation.

“Our first tool was for creating a shot and importing all the image sequences," explains Romain. "We had to inform the shot name, therefore we had the Key Shot, the first shot that led the settings for the rest of the sequence, or a Baby Shot, where all the settings were copied from another shot and just the content was updated.”

On the right of the Node Graph, we can see the imported layers. Here, Lego will duplicate or delete layer templates depending on the shot content, which could range from the number of backgrounds or crowds to character layers or FX. The artist can access every AOV in every layer.

In the middle, we have global controllers for all of the elements of the shots, such as motion blur, fog, depth of field, and light wraps.

The left-hand side area was used for work specific to the shot like digital matte painting (DMP), particles, and skies.

Above is Feng-shui, a tool the team created and used for Holdout and order management. For large comps with a lot of layers, this is a great quality-of-life tool for the artists, enabling them to access, inspect, and change the order of the layers, all in a simple window.

Inspired by Bokeh, an essential tool brought by the Nuke 14.0 release, TAT Productions created Optical Defocus — an in-house Depth of Field tool based on ZDefocus.

Finally, they created Position Matte, a tool based on Deep and Position World information that allowed the studio to create custom Position Mattes, Z Depth, 3D noise, or Vertical Mattes. All these tools do the math operation in Deep, therefore the anti-aliasing is preserved, and they have little to no edging artifacts. “It’s all linked to the shot camera — extracted from EXR metadata — so we don’t have to manually rotoscope,” says Romain.
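The principle behind a position-based matte can be sketched as follows. This is a simplified stand-in, not the studio's Position Matte tool: it assumes a world-space position is available for each sample and builds a spherical matte with a linear falloff. The function name and parameters are hypothetical.

```python
import math


def position_matte(p_world, center, radius, falloff):
    """Return a 0..1 matte value from a world-space position.

    Points inside `radius` of `center` are fully selected; the matte
    then fades linearly to zero over the `falloff` distance.
    """
    distance = math.dist(p_world, center)
    if distance <= radius:
        return 1.0
    if distance >= radius + falloff:
        return 0.0
    return 1.0 - (distance - radius) / falloff


center = (0.0, 0.0, 0.0)
inside = position_matte((0.5, 0.0, 0.0), center, radius=1.0, falloff=1.0)
edge = position_matte((1.5, 0.0, 0.0), center, radius=1.0, falloff=1.0)
outside = position_matte((3.0, 0.0, 0.0), center, radius=1.0, falloff=1.0)
```

In a deep workflow, a function like this would be evaluated per deep sample before flattening rather than per flattened pixel, which is why edges keep their anti-aliasing.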

On to reviewing

“All of our shots were rendered with NukeX in Deadline with a light denoiser," Romain explains. "We also created a proxy video for all our EXR sequences for performance reviews.”

When it came to reviewing, the team turned to HieroPlayer, Foundry’s flexible — and free — review tool that enables artists to review shots in context and compare render versions efficiently.

“When HieroPlayer became available with Nuke and NukeX, we integrated it into our pipeline to review multiple shots with multiple timelines,” says Romain. The team at TAT Productions used our tool for lighting video, lighting precomped EXRs, comp videos, denoised EXRs, comp EXRs, and denoised videos.

As shown above, HieroPlayer enabled the team to check an entire sequence at the same time and to visualize every step.

“Moreover, we linked this to our internal production tracker, so we could directly change the status of the shot,” says Romain.

Let’s get creative

One of the biggest technical challenges the team had to manage on Epic Tails was the Zeus sequence. In that sequence, Zeus, king of the gods, watches the main character's adventures in his basin and plays with it. But what were the challenges?

“On the 3D side, we had separate scenes but, because of the complexity of the content, we couldn’t afford to make Level of Detail (LODs) or other assets,” Romain explains. “So we rendered each scene separately and reunited them in compositing while still managing FX, camera movement and parallax.”

We asked the team at TAT Productions to share with us some of their favorite scenes they worked on. Keep on scrolling to unravel the fascinating visual history they created.

Sailing to the future

So, what does the future look like at TAT Productions? “We're currently working on our fifth and sixth films, The Jungle Bunch 2 (2023) and Pets on a Train (2025), as well as on the famous Netflix series, Asterix, which is scheduled for 2024,” says Romain.

The company plans to use NukeX in future projects to carry on creating the magical and immersive stories that it is known for. “We have created all these custom tools for multiple projects and maintained them across multiple NukeX versions," says Romain. "We plan to keep on working with NukeX on this workflow and constantly improve it. We're also looking closely at ACES & HDR workflows.”